SONiC: The $900K Ethernet Switch Killer

From Servers to Switches: How Open Source Finally Conquered Networking

The Origins of Open Networking

In October 2010, a pivotal meeting took place among leading network architects from the world's largest cloud providers. James Hamilton, Chief Architect at Amazon Web Services, presented "Data Center Networks Are In My Way" alongside colleagues from Microsoft including Albert Greenberg and Dave Maltz, who led networking innovation at Microsoft Azure.

This presentation highlighted a critical problem: while data centers were scaling rapidly to meet growing cloud demands, networking equipment had become the primary bottleneck. Traditional networking gear - expensive, inflexible, and difficult to manage - was fundamentally limiting how cloud providers could grow their infrastructure.

The meeting marked a turning point in data center networking. These engineers identified that networking technology needed to evolve from proprietary, single-vendor systems to open, flexible platforms - similar to how server infrastructure had already transformed. Their insights eventually led to the development of open network operating systems like SONiC, which Microsoft would later contribute to the open-source community.

This presentation essentially served as the problem statement that would drive the next decade of innovation in data center networking architecture.

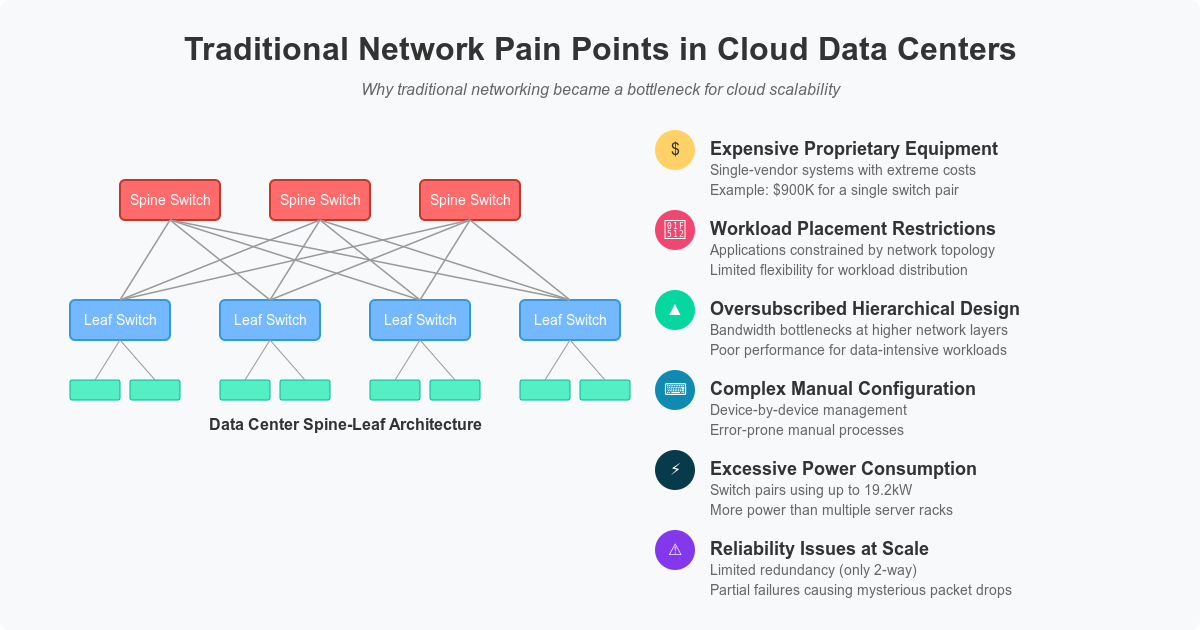

Why Traditional Networking Failed Cloud Data Centers

James Hamilton's presentation identified several critical problems with traditional networking equipment that were severely limiting cloud infrastructure. These issues weren't just technical inconveniences; they were fundamental barriers to scaling cloud services efficiently. Let's look at the main problems and why they matter:

Expensive Proprietary Equipment

Traditional network vendors like Cisco and Juniper sold complete systems in which they controlled everything: the chips, hardware, software, and support. This approach meant:

Customers had to buy everything from a single company

Vendors could charge premium prices because components weren't interchangeable

Financial impact was substantial: The presentation noted a Juniper EX8216 switch costing $716,000 without optics and $908,000 with optics

Workload Placement Restrictions

Network architecture created significant constraints on where applications could run within a data center:

Applications that needed to communicate frequently had to be physically close due to bandwidth limitations between different parts of the network

Moving workloads between servers often required complex network reconfiguration

For example, database servers and the web servers that relied on them typically needed to remain in the same network segment, severely limiting operational flexibility

Pyramid-Style Bottlenecks

Traditional data center networks used a pyramid structure with reduced bandwidth at higher levels:

Traffic between different segments of the data center had to travel through oversubscribed "core" connections

This design couldn't support data-intensive workloads efficiently

Large-scale operations like MapReduce jobs (a process that crunches huge datasets across many servers) faced serious performance bottlenecks
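Oversubscription is usually expressed as the ratio of the bandwidth a switch tier accepts from below to the bandwidth it can forward upward, and the ratios multiply as traffic climbs the pyramid. The minimal sketch below uses hypothetical port counts and a hypothetical 4:1 aggregation tier chosen only for illustration; they are not figures from Hamilton's presentation.

```python
def oversubscription(down_ports: int, down_gbps: float,
                     up_ports: int, up_gbps: float) -> float:
    """Ratio of downstream (server-facing) to upstream (core-facing) bandwidth."""
    downstream = down_ports * down_gbps
    upstream = up_ports * up_gbps
    return downstream / upstream


# Hypothetical top-of-rack switch: 48 x 10G server ports, 4 x 10G uplinks.
tor = oversubscription(48, 10, 4, 10)   # 480 / 40 = 12.0

# Hypothetical aggregation tier that adds a further 4:1 on top.
agg = 4.0

# Worst case (every server sending across the core at once): ratios multiply.
end_to_end = tor * agg                  # 48.0

print(f"ToR {tor:.0f}:1, end-to-end {end_to_end:.0f}:1")
print(f"Per-server share of a 10G NIC across the core: {10 / end_to_end:.3f} Gbps")
```

Under these assumptions a server with a 10 Gbps NIC gets only about 0.2 Gbps when talking to a server on the far side of the core, which is exactly the kind of constraint that hurts MapReduce-style jobs.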

Manual, Error-Prone Configurations

Networking equipment was managed device-by-device through complex command-line interfaces:

Each switch or router needed individual manual configuration

No central way to manage everything together

Errors in configuration were common and could cause major outages

Example: Updating the configuration on 100 switches might require logging into each one individually and typing the same commands 100 times
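The alternative to typing the same commands 100 times is to generate every switch's configuration from one template and push it with automation. The sketch below covers only the generation step. Its output is loosely modeled on SONiC's `config_db.json` layout (a `DEVICE_METADATA` table with `hostname` and `bgp_asn`, and a `LOOPBACK_INTERFACE` table), but treat that layout as an assumption; the `leaf-NNN` naming, the ASN numbering, and the 10.1.0.0/16 loopback plan are hypothetical.

```python
import json


def build_config(index: int, base_asn: int = 65100) -> dict:
    """Build one switch's config from a shared template (hypothetical values)."""
    hostname = f"leaf-{index:03d}"
    return {
        "DEVICE_METADATA": {
            "localhost": {"hostname": hostname, "bgp_asn": str(base_asn + index)}
        },
        # Loopback addresses drawn from a hypothetical 10.1.0.0/16 plan.
        "LOOPBACK_INTERFACE": {
            f"Loopback0|10.1.{index // 256}.{index % 256}/32": {}
        },
    }


# One template, 100 switches: a fix to build_config() reaches all of them.
configs = {
    cfg["DEVICE_METADATA"]["localhost"]["hostname"]: cfg
    for cfg in (build_config(i) for i in range(1, 101))
}

print(len(configs))
print(json.dumps(configs["leaf-001"], indent=2))
```

Because every file comes from the same function, a change is made once and reviewed once, and the risk of a typo on switch 73 of 100 disappears.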

Power-Hungry Hardware

Network equipment used excessive power compared to its function:

The presentation notes that some switches consumed 19.2 kW per pair

Entire racks of servers often used only 8–10 kW

Example: A single network switch pair could draw roughly as much power as two full racks of servers, even though the servers do far more computational work
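Using only the two figures quoted from the presentation, the comparison works out as follows:

```python
# Figures quoted in the presentation, in kilowatts.
SWITCH_PAIR_KW = 19.2
RACK_KW_RANGE = (8.0, 10.0)

for rack_kw in RACK_KW_RANGE:
    racks_equivalent = SWITCH_PAIR_KW / rack_kw
    print(f"At {rack_kw:.0f} kW per rack, one switch pair draws "
          f"as much as {racks_equivalent:.2f} racks")

# Rack power below which the switch pair out-draws two full racks.
break_even_kw = SWITCH_PAIR_KW / 2
print(f"Break-even: {break_even_kw:.1f} kW per rack")
```

Across the quoted 8–10 kW range, one switch pair is worth between 1.92 and 2.4 racks, and it out-draws two full racks whenever racks average below 9.6 kW.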

The Big Picture: A Broken Model

The fundamental insight was that these problems all stemmed from an outdated business model. While server infrastructure had evolved away from the old "mainframe" approach (where one vendor controlled everything) to an open model with standardized components, networking remained locked in the past. The next section will explore how this contrast pointed toward the solution that would eventually become SONiC.

How Servers Evolved Away from the Mainframe Model

The contrast between networking and servers that Hamilton highlighted reveals a crucial historical shift in computing architecture.

The Old Mainframe Server Model (1960s-1980s)

In the mainframe era, companies like IBM controlled the entire technology stack:

They designed CPU chips, built hardware systems, and wrote operating systems

They developed applications, programming tools, and provided all support services

Buying an IBM mainframe meant complete lock-in to IBM's ecosystem, creating several problems:

High costs due to lack of competition at each layer

Slow innovation with limited incentives for improvement

Enormous switching costs giving vendors tremendous leverage

Restricted third-party software and tools ecosystem

The Big Change: How Servers Got Cheap and Flexible (1980s–2000s)

Four things changed everything:

CPU Standardization

Intel's x86 architecture became a “common language” for computer chips. Soon AMD and others were making compatible processors, and competition pushed prices down.

Cheap, Interchangeable Hardware

Companies like Dell started selling servers as basic building blocks. You could mix Dell servers with HP parts, much like building a PC from parts bought at Amazon and Best Buy.

Open Operating Systems

Linux emerged as a hardware-independent, open-source option

Microsoft Windows Server provided another hardware-agnostic choice

Standard operating systems worked across different vendors' hardware

Rich Software Ecosystem

Developers started making software that worked on any server. Need a database? Just install it; there's no need to ask IBM for permission.

Linux: The Game-Changer

Of all these shifts, Linux stole the show. This open-source hero wasn’t just another OS; it was a revolution:

Free Forever: No licensing fees, just download and go.

Customizable: Need it to do something special? Change the code yourself.

Universal: It ran on servers from any vendor.

Community-Powered: A global army of developers kept it improving.

Linux didn’t just cut costs; it broke the vendor stranglehold and gave businesses a platform they could actually control.

Servers vs. Networking: A Tale of Two Worlds

By 2010, when Hamilton presented these ideas, servers had transformed completely. They followed a new model in which hardware and software could come from different sources:

Costs dropped

Performance roughly doubled every two years (helped by competition between Intel and AMD).

Flexibility exploded (you could mix Dell servers with HP storage and open-source software).

But Networks Were Still Stuck in the 1970s:

Cisco and Juniper acted like old-school mainframe companies:

They designed their own chips (ASICs).

They built their own hardware.

They wrote their own software (IOS & Junos).

They even controlled the tools to manage it all.

The Lightbulb Moment

The fix was obvious: Do what servers did.

Separate the hardware from the software

Create open interfaces between layers

Build on standardized components.

The Need for a New Network Operating System

While it was clear that networking needed to follow the same evolutionary path as servers, there was a major obstacle. Big networking companies like Cisco and Juniper were making a lot of money selling their all-in-one systems. They didn't want to change a business model that was highly profitable for them. The change would need to come from a different direction - from the companies that were buying and using these expensive network devices, not from those selling them.

The Birth of SONiC (Software for Open Networking in the Cloud)

Around 2013-2014, Microsoft Azure's network engineers began developing their own solution. Microsoft was in a unique position:

They were running massive data centers where the networking problems were costing them millions

They had deep experience building operating systems and software platforms

Microsoft decided to create a brand-new network operating system that would:

Be freely available as open-source software

Work on standard network switches from many manufacturers

Support network chips from different companies

Be easy to manage through modern interfaces

Handle the enormous scale of cloud data centers

This project became SONiC (Software for Open Networking in the Cloud), which Microsoft shared with the world through the Open Compute Project in 2016.

What is SONiC?

Simply put, SONiC is a specialized operating system for network hardware. But unlike traditional network systems, SONiC was built very differently:

Built on Linux: SONiC uses Debian Linux as its foundation - the same reliable operating system that runs millions of servers worldwide

Container-Based Design: Different networking functions (routing, switching, management) run in separate containers, making it easy to update or replace individual components without affecting the whole system

This means: If you want to upgrade just the routing function, you can replace that container without touching the rest of the system components

Central Database: Uses a Redis database to keep track of all network information, making it easier for all parts of the system to share information

For example: Both the routing component and monitoring tools can access the same network data without conflicts

Switch Abstraction Interface (SAI): Creates a common language (APIs) for talking to different network chips

This means: The same SONiC code can run on switches using chips from Broadcom, Mellanox, or Intel, provided the chip vendor supplies a SAI implementation for its hardware

Open Source: Anyone can use, modify, and improve the code
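To make the central-database idea concrete, here is a minimal sketch that stands in for Redis with an in-process dictionary plus a simple publish/subscribe hook. The `ROUTE_TABLE:<prefix>` key with `nexthop` and `ifname` fields is modeled on how SONiC's application database names route entries, but treat that naming as an assumption. The `SharedStateDB` class is hypothetical: its method names echo Redis commands, but it is not the redis-py API, and on a real switch components go through Redis itself (SONiC's `swss-common` library wraps that access).

```python
from collections import defaultdict
from typing import Callable, Dict, List

Callback = Callable[[str, Dict[str, str]], None]


class SharedStateDB:
    """Toy stand-in for the Redis database that SONiC components share (hypothetical)."""

    def __init__(self) -> None:
        self._hashes: Dict[str, Dict[str, str]] = {}
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def hset(self, key: str, fields: Dict[str, str]) -> None:
        # Write (or update) a hash, then notify every watcher of its table.
        self._hashes.setdefault(key, {}).update(fields)
        table = key.split(":", 1)[0]
        for callback in self._subscribers[table]:
            callback(key, dict(self._hashes[key]))

    def hgetall(self, key: str) -> Dict[str, str]:
        return dict(self._hashes.get(key, {}))

    def subscribe(self, table: str, callback: Callback) -> None:
        self._subscribers[table].append(callback)


db = SharedStateDB()
seen = []  # what a monitoring tool observed

# A monitoring component watches route changes without talking to the routing daemon.
db.subscribe("ROUTE_TABLE", lambda key, fields: seen.append((key, fields["nexthop"])))

# The routing component publishes a learned route into the shared database.
db.hset("ROUTE_TABLE:10.0.0.0/24", {"nexthop": "192.168.1.1", "ifname": "Ethernet0"})

print(db.hgetall("ROUTE_TABLE:10.0.0.0/24"))
print(seen)
```

The point of the design is that producer and consumer never reference each other: each only knows the table name, so either side can be restarted or replaced independently.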

This design directly addressed the old "everything from one vendor" problem by creating clear boundaries between different parts of the system and standard ways for these parts to work together.
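The Switch Abstraction Interface is the clearest example of such a boundary. The sketch below shows the shape of the idea in Python: vendor-independent code programs routes through one interface, and each chip vendor supplies its own implementation. The real SAI is a C API (route entries, for instance, are created through the route API's `create_route_entry` function); every class and method name below is hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class SwitchChipAPI(ABC):
    """Hypothetical vendor-neutral interface, in the spirit of SAI."""

    @abstractmethod
    def create_route(self, prefix: str, next_hop: str) -> str: ...


class VendorAChip(SwitchChipAPI):
    def create_route(self, prefix: str, next_hop: str) -> str:
        # A real vendor library would program the ASIC's routing tables here.
        return f"vendor-a: programmed {prefix} via {next_hop}"


class VendorBChip(SwitchChipAPI):
    def create_route(self, prefix: str, next_hop: str) -> str:
        return f"vendor-b: programmed {prefix} via {next_hop}"


def sync_routes(chip: SwitchChipAPI, routes: Dict[str, str]) -> List[str]:
    """Vendor-independent logic: identical no matter which chip sits underneath."""
    return [chip.create_route(prefix, nh) for prefix, nh in sorted(routes.items())]


routes = {"10.0.0.0/24": "192.168.1.1", "10.0.1.0/24": "192.168.1.2"}
for chip in (VendorAChip(), VendorBChip()):
    for line in sync_routes(chip, routes):
        print(line)
```

Swapping the chip changes only which implementation is plugged in; `sync_routes` stays untouched, which is what lets one network operating system run on silicon from several vendors.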

This transformation in networking was about a decade behind the server revolution, but it followed the same fundamental pattern - breaking apart the vertically integrated stack to enable competition, innovation, and cost reduction at every layer.

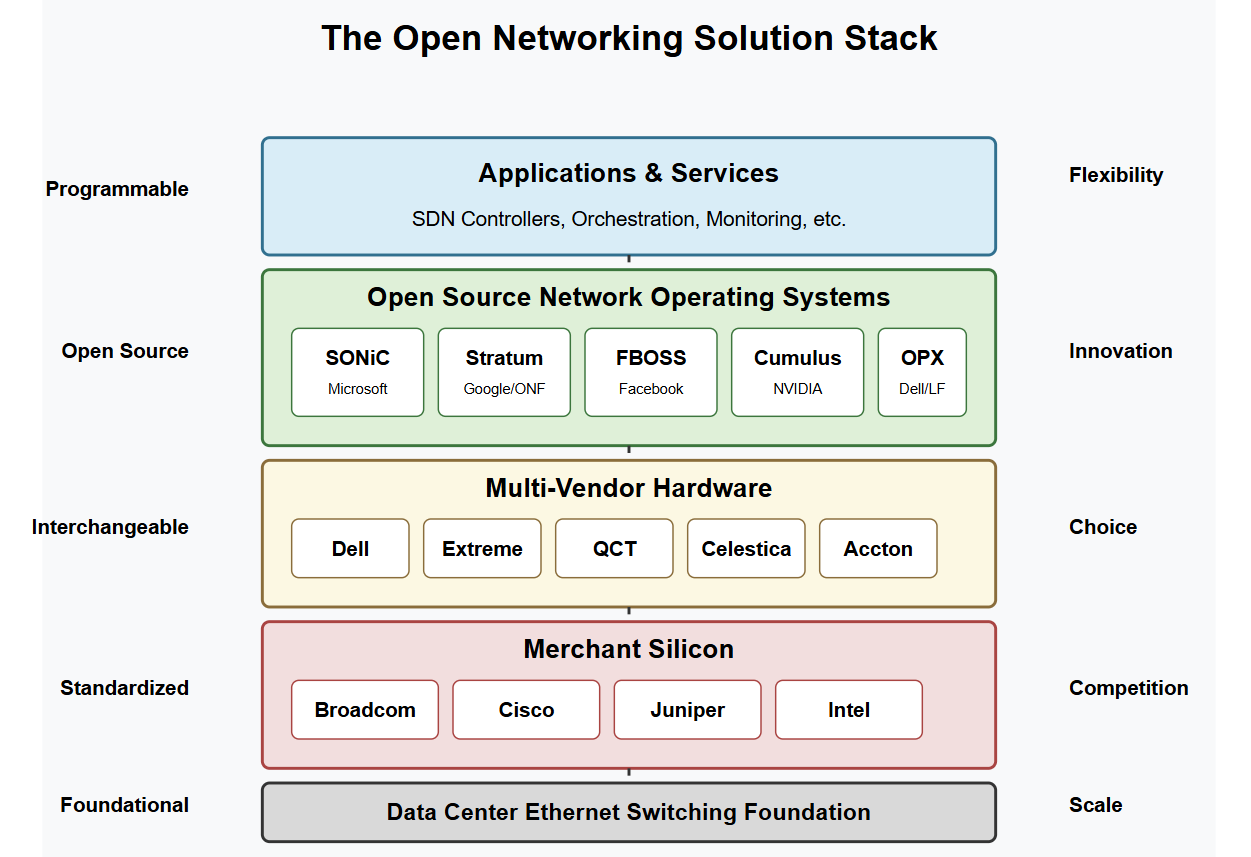

The Overall Open Networking Solution

The industry has converged on a comprehensive solution for open networking, particularly for data centers:

Data Center Ethernet switching solution

Based on merchant (open) silicon from different vendors, such as Broadcom, NVIDIA (Mellanox), Marvell, and Cisco (Silicon One).

Switch hardware is available from multiple vendors, such as Dell, Extreme, QCT, Celestica, and Accton.

Open network operating systems, led by SONiC (available in multiple distributions from different vendors), alongside other open alternatives:

Broadcom's Enterprise SONiC distribution is analogous to Red Hat's version of Linux: it adds enterprise-class features and comes with full support.

Stratum: originally developed by Google, now part of the Open Networking Foundation (ONF).

FBOSS: developed by Facebook (now Meta).

Cumulus Linux: developed by Cumulus Networks (now part of NVIDIA).

OpenSwitch (OPX): developed by Dell, now maintained by the Linux Foundation.

Each of these operating systems represents a different approach to solving the same fundamental problem: breaking away from the traditional vendor-locked networking model to create more flexible, programmable network infrastructure based on open standards and interfaces.

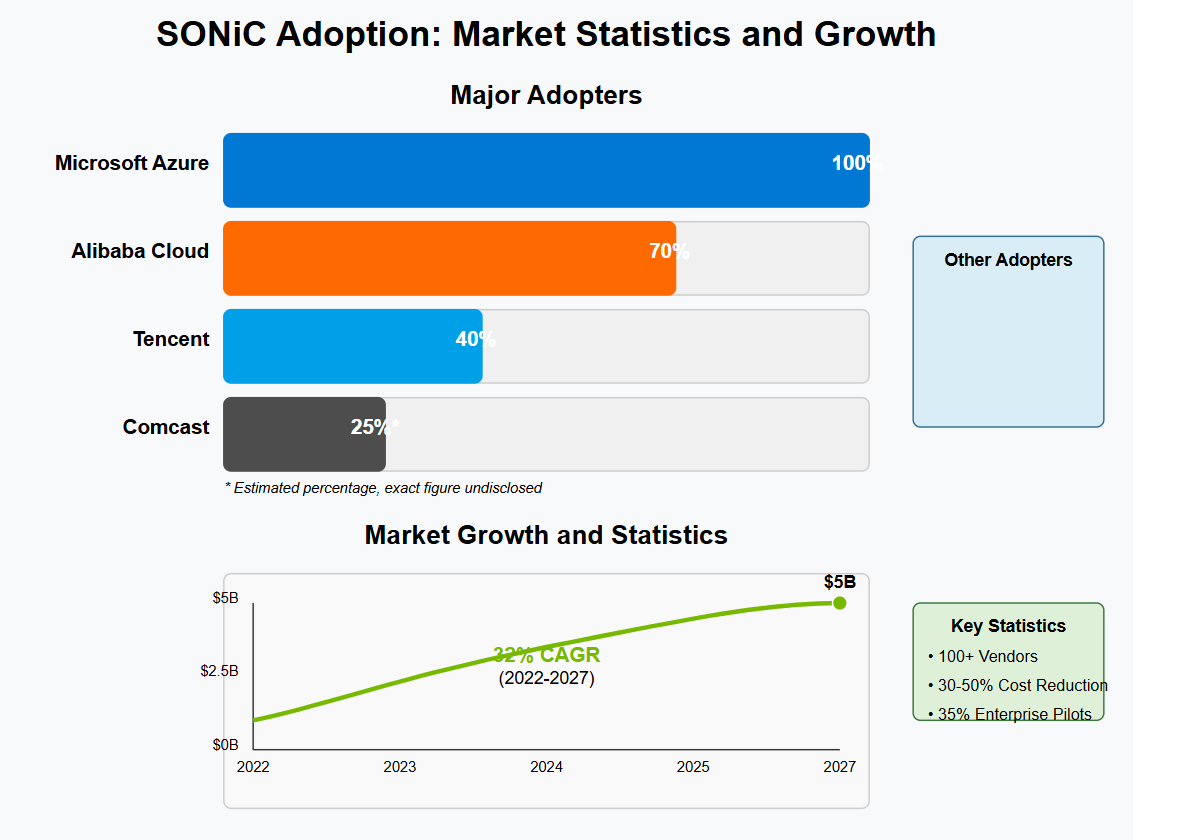

SONiC Adoption: Market Statistics and Growth

SONiC’s adoption is dominated by hyperscalers like Microsoft and Alibaba, but it is increasingly penetrating enterprise and edge networks. With a projected $5B market by 2027 and growing support from vendors like NVIDIA and Dell, SONiC is well positioned to redefine data center networking economics.

Major Adopters and Deployment Percentages

Microsoft Azure

Deployment: 100% of Azure’s data center switches run SONiC.

Details: Microsoft, SONiC’s founding contributor, migrated its entire Azure network to SONiC by 2020, citing improved automation and scalability.

Alibaba Cloud

Deployment: ~70% of its data center switches use SONiC.

Details: Alibaba announced in 2020 that SONiC powered the majority of its Ethernet switches, reducing operational costs by 50%.

Tencent

Deployment: ~30–40% of its data centers (as of 2022).

Details: Tencent uses SONiC for its containerized cloud infrastructure, emphasizing scalability for AI/ML workloads.

Comcast

Deployment: Partial adoption (exact % undisclosed).

Details: Comcast announced SONiC integration in 2022 to modernize its cable broadband infrastructure.

Other Adopters

AT&T, LinkedIn (a Microsoft subsidiary), Baidu, eBay, and NVIDIA (for AI networking) also use SONiC in production. NVIDIA offers its own SONiC distribution alongside Cumulus Linux on its Spectrum switches for GPU-driven workloads.

Market Statistics and Growth

Market Size: The market for SONiC-controlled switch ports is projected to grow at a 32% CAGR (2022–2027), reaching $5 billion in annual revenue by 2027.
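Taking the projection above at face value, a 32% compound annual growth rate over the five years from 2022 to 2027 implies a 2022 starting point of roughly $1.25 billion. The arithmetic, as a quick check using only the two numbers stated in the text:

```python
TARGET_2027 = 5.0e9   # projected annual revenue in 2027 (USD), from the text
CAGR = 0.32           # compound annual growth rate, from the text
YEARS = 2027 - 2022   # five compounding periods

# Work backward: target = base * (1 + CAGR) ** YEARS.
implied_2022 = TARGET_2027 / (1 + CAGR) ** YEARS
print(f"Implied 2022 base: ${implied_2022 / 1e9:.2f}B")

# Year-by-year path from that base back up to the target.
for year in range(2022, 2028):
    value = implied_2022 * (1 + CAGR) ** (year - 2022)
    print(year, f"${value / 1e9:.2f}B")
```

Since 1.32 raised to the fifth power is about 4.0, the projection amounts to the market roughly quadrupling over the period.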

Adoption Drivers:

Cost Reduction: SONiC’s disaggregated model cuts CapEx/OpEx by 30–50%

Vendor Neutrality: Supported by 100+ vendors, including Arista, Dell, and Broadcom.

Edge and AI Use Cases: SONiC is expanding into edge data centers and AI/ML workloads (e.g., NVIDIA’s Spectrum switches).

Enterprise Growth:

Enterprise adoption is rising, with 35% of large enterprises piloting SONiC in 2023.

GitHub Activity: The SONiC GitHub repo has 5,000+ commits and 70+ contributors from companies like Google, Intel, and Meta.

Summary

Network hardware is not the most expensive item in a data center; servers and storage are. Yet cloud providers cannot fully utilize those vital resources because of the network limitations described above. In other words, network challenges prevent cloud providers from getting the most out of their most valuable investment.

Why is the networking business model broken? Because it is proprietary and vertically integrated. When you buy a router or switch from a networking vendor, it is a black box: proprietary software running on proprietary hardware. This mirrors the mainframe era, when companies like IBM ran the same business model. Servers were then revolutionized by competition at every layer: at the CPU level (AMD vs. Intel), at the server level (IBM, Dell, HPE, etc.), and at the operating system level (different flavors of open-source Linux, plus Windows Server). None of this existed in the networking industry, which is why networks became the real challenge in scaling cloud providers' data centers.

The suggested solution was to build switches on merchant (open) silicon and run a hardware-independent, open-source network operating system on top of these white boxes (routers and switches).

The server industry moved on years ago, and the same shift is now happening in networking. Once again the trend was started by the hyperscalers (AWS, Azure, Google, etc.), which adopted open silicon with open-source network operating systems, separating hardware from software exactly as happened in the server revolution. Having started with cloud providers, it is now gaining popularity among service providers and enterprise customers.

Finally, in the merchant silicon market Broadcom holds a position similar to Intel's in the x86 market, and on the operating system side SONiC is becoming the Linux of networking.