Linux 101 (Part 5): Command Pipelines

Learn Linux CLI the Right Way

Objective

In this Part 5, you'll discover how to combine Linux commands using pipes, one of the most powerful features of the command line. You'll learn how to take the output from one command and feed it directly into another, creating efficient command chains without temporary files. We'll explore practical techniques like saving command output to files while still using it in a pipeline, and handling commands that only accept arguments. By the end, you'll have the foundational skills to build time-saving command pipelines that can accomplish complex tasks with just a single line of text.

Understanding the cut Command

Before we dive into piping commands together, let's learn about a command called “cut”. This command extracts specific pieces of text from a file or from text input.

Imagine you have a sentence like "The sky is blue today" and you only want the word "blue". The cut command can help you do exactly that!

What Does cut Do?

The cut command works by:

Splitting text into pieces based on a divider character (like a space or comma)

Picking out only the pieces you want and displaying them for you

When working with the cut command, we need to understand two important options (if you don't know what options are yet, refer back to Part 2):

--delimiter (or -d for short): The delimiter is just a fancy word for "divider" - it's the character that separates your text into chunks.

cut --delimiter=" "

In this example, we're telling cut to split the text wherever it finds a space. So "Hello World" would be split into "Hello" and "World".

Some common delimiters people use:

Space: --delimiter=" " (splits at each space)

Comma: --delimiter="," (splits at each comma, good for CSV files)

Colon: --delimiter=":" (splits at each colon, used in many system files)

--fields (or -f for short): After the text is split by your delimiter, --fields tells cut which piece you want.

cut --fields=1

This means "give me the first piece after splitting". Fields are counted starting at 1, not 0.
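To see both options working together, here is a small sketch. The file names people.csv and accounts.txt and their contents are made up for illustration; the lines are saved with the output redirection from Part 4 so that cut has a file to read.

```shell
# Hypothetical sample data, saved to files with output redirection
echo "alice,30,engineer" > people.csv
echo "root:x:0:0:root:/root:/bin/bash" > accounts.txt

# Split at each comma and keep the 2nd piece
cut --delimiter="," --fields=2 people.csv
# prints: 30

# Split at each colon and keep the 1st piece
cut --delimiter=":" --fields=1 accounts.txt
# prints: root

# The short forms -d and -f behave the same way
cut -d "," -f 3 people.csv
# prints: engineer
```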

Let's Try a Real Example

Here's how to use cut with a real file:

# First, let's save today's date to a file

$ date > today.txt

# Let's look at what's in the file

$ cat today.txt

Mon Apr 8 15:30:45 EDT 2025

# Now let's get just the day of the week (Mon)

$ cut --delimiter=" " --fields=1 today.txt

Mon

What happened here? The date command gave us "Mon Apr 8 15:30:45 EDT 2025", and we redirected that output into a file called today.txt (if you don't know what stdout redirection means, go back and read Part 4).

When we used cut with a space delimiter, it split the full date (Mon Apr 8 15:30:45 EDT 2025) into:

"Mon"

"Apr"

"8"

"15:30:45"

"EDT"

"2025"

And since we asked for field 1, it gave us just "Mon" back as an output on our terminal window.
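The --fields option also accepts a comma-separated list (2,3) or a range (1-3). The sketch below uses a fixed sample line in place of real date output, so the results are the same every time; sample.txt is a made-up file name.

```shell
# A fixed line standing in for the output of date (an assumption for repeatability)
echo "Mon Apr 8 15:30:45 EDT 2025" > sample.txt

# Fields 2 and 3: the month and the day number
cut --delimiter=" " --fields=2,3 sample.txt
# prints: Apr 8

# Fields 1 through 3, written as a range
cut --delimiter=" " --fields=1-3 sample.txt
# prints: Mon Apr 8

# Field 6: the year
cut --delimiter=" " --fields=6 sample.txt
# prints: 2025
```

When more than one field is selected, cut joins them again with the same delimiter it used for splitting.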

Your First Linux Pipe: Connecting Commands Together

Now that you understand how the cut command works, let's learn about one of the most powerful features in Linux: the pipe.

What is a Pipe?

A pipe, written as the vertical bar symbol |, connects two commands together. It takes the output from the first command and sends it directly as input to the second command.

Think of it like a real pipe that water flows through:

The first command produces some output (water)

The pipe | carries that output

The second command receives that output as its input

Your First Pipe Command

Let's create a simple pipe using the “date” and “cut” commands:

date | cut --delimiter=" " --fields=1

When you run this command, you'll see something like:

Mon

Let's break down what's happening step by step:

First Command: date

The date command runs and prints the current date and time

For example: Mon Apr 8 15:30:45 EDT 2025

Normally this would display on your screen

The Pipe: |

The pipe symbol captures that output

Instead of showing it on the screen, it sends it to the next command as input

Second Command: cut --delimiter=" " --fields=1

The cut command receives the complete date and time text as input

It splits the text at each space (based on our option --delimiter=" ")

It extracts just the first field "Mon" (based on our option --fields=1)

It displays this result on your screen

Why is This Useful?

Without the pipe, you would need to:

Run the date command

Save its output to a file

Run the cut command on that file

Delete the file when done

With the pipe, you do all this in one simple command! No temporary files needed.
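The two approaches can be compared side by side. This is only a sketch: it uses a fixed echo line in place of date so the result is repeatable, and temp_date.txt is a made-up file name.

```shell
# The long way: save to a temporary file, cut from it, then clean up
echo "Mon Apr 8 15:30:45 EDT 2025" > temp_date.txt
cut --delimiter=" " --fields=1 temp_date.txt
# prints: Mon
rm temp_date.txt

# The pipe way: one line, nothing to clean up afterwards
echo "Mon Apr 8 15:30:45 EDT 2025" | cut --delimiter=" " --fields=1
# prints: Mon
```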

Adding Redirection to Your Pipe Command

Now that you understand how pipes work, let's combine them with redirection to save your output to a file!

Saving Pipe Output to a File

Remember how we used the pipe to connect date and cut? Let's take that same command and save the result to a file called today.txt:

date | cut --delimiter=" " --fields=1 > today.txt

When you run this command:

The date command gets today's date and time

The pipe | sends that output to cut

The cut command extracts just the day of the week

The redirection operator > saves that result to today.txt

Nothing appears on your screen because the output went to the file

To see what was saved, use the cat command:

cat today.txt

This will show:

Mon

Placement of the Redirection Operator

An interesting thing about redirection is that it doesn't matter where you place the > operator within a command. The shell accepts it before, between, or after the command's options, as long as it sits on the same side of the pipe as the command whose output you want to save.

All of these commands do exactly the same thing:

# At the end (most common placement)

date | cut --delimiter=" " --fields=1 > today.txt

# In the middle (unusual but works)

date | cut --delimiter=" " > today.txt --fields=1

# Before the options (unusual but works)

date | cut > today.txt --delimiter=" " --fields=1

# After the pipe directly (unusual but works)

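date | > today.txt cut --delimiter=" " --fields=1

This works because the shell pulls the redirection out of the command before running it: it opens today.txt, connects the command's output to it, and only then starts cut with the remaining words as its arguments. The redirection applies to the single command it is written with, which here is cut.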
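One way to check this yourself is to send each placement to its own file and compare. The sketch below swaps date for a fixed echo line so the result is repeatable, and the file names end.txt, middle.txt, before.txt and first.txt are made up. Each line uses >, which overwrites the file; >> would append to it instead.

```shell
# Redirection at the end
echo "Mon Apr 8 15:30:45 EDT 2025" | cut --delimiter=" " --fields=1 > end.txt

# Redirection between the two options
echo "Mon Apr 8 15:30:45 EDT 2025" | cut --delimiter=" " > middle.txt --fields=1

# Redirection before the options
echo "Mon Apr 8 15:30:45 EDT 2025" | cut > before.txt --delimiter=" " --fields=1

# Redirection before the command name
echo "Mon Apr 8 15:30:45 EDT 2025" | > first.txt cut --delimiter=" " --fields=1

# Every file contains the same single line: Mon
cat end.txt middle.txt before.txt first.txt
```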

Connecting Three Commands with Multiple Pipes

Now that you can connect two commands with a pipe and redirect the output to a file, let's take it a step further! You can actually connect three, four, or even more commands in a row using multiple pipes.

Let's create a three-command pipeline using commands you already know:

date | cut --delimiter=" " --fields=1-3 | cut --delimiter=" " --fields=3

When you run this command, you'll see something like:

8

Breaking Down Our Three-Command Pipeline

Let's take this step-by-step:

First Command: date

Output: Mon Apr 8 15:30:45 EDT 2025

This full date and time output flows into the first pipe

Second Command: cut --delimiter=" " --fields=1-3

Input: Mon Apr 8 15:30:45 EDT 2025 (from the date command)

Output: Mon Apr 8 (just the first three fields)

This shortened output flows into the second pipe

Third Command: cut --delimiter=" " --fields=3

Input: Mon Apr 8 (the output from the previous cut command is now our input)

Output: 8 (just the day number, because we asked for field 3)

This final result displays on your screen

This is like running these commands separately in steps:

date > temp1.txt

cut --delimiter=" " --fields=1-3 temp1.txt > temp2.txt

cut --delimiter=" " --fields=3 temp2.txt

But with pipes, you don't need any temporary files! Everything flows smoothly from one command to the next.
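One caveat worth checking on your own machine: many systems print single-digit days with an extra space in front (Apr  8, with two spaces). cut treats every space as a divider, so the extra space creates an empty field and the day number moves to field 4. A possible workaround, sketched here with fixed sample lines instead of real date output, is to squeeze repeated spaces with tr -s first. tr is not covered in this part, so treat it as an extra tool.

```shell
# Sample line with TWO spaces before the day number (stand-in for date output)
echo "Mon Apr  8 15:30:45 EDT 2025" | cut --delimiter=" " --fields=3
# prints an empty line: field 3 is the gap between the two spaces

# Squeeze each run of spaces into a single space, then cut as before
echo "Mon Apr  8 15:30:45 EDT 2025" | tr -s " " | cut --delimiter=" " --fields=3
# prints: 8
```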

Understanding the Problem with Redirection in Pipes

Let's talk about an important limitation when using redirection with pipes. Imagine you want to:

Save the complete date output to a file

AND ALSO send that same output to the cut command

You might try something like this:

date > fulldate.txt | cut --delimiter=" " --fields=1

But when you run this, something unexpected happens - the cut command doesn't receive any input! Why?

Redirection Takes Priority Over Pipes

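Here's the key thing to understand: when a command has both a redirection (>) and a pipe (|), the redirection wins. The shell connects the pipe first and then applies the redirection, which points the command's output at the file instead.

The date command runs and produces output

The redirection > fulldate.txt captures ALL of that output and sends it to the file

Nothing is left to go through the pipe to the cut command

The cut command receives no input, so it produces no output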

It's like putting a bucket at the end of a water hose. Once the water goes into the bucket, there's nothing left to flow elsewhere!
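You can watch this happen with a short experiment. The sketch assumes bash, the default shell on most Linux systems (some other shells handle this case differently), and uses a fixed echo line in place of date. cut_result.txt is a made-up file name used to capture whatever cut prints.

```shell
# The redirection on echo takes the output away from the pipe
echo "Mon Apr 8 15:30:45 EDT 2025" > fulldate.txt | cut --delimiter=" " --fields=1 > cut_result.txt

# The file received the whole line
cat fulldate.txt
# prints: Mon Apr 8 15:30:45 EDT 2025

# cut received nothing, so its result file is empty
cat cut_result.txt
# prints nothing
```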

Introducing the tee Command: The Perfect Solution

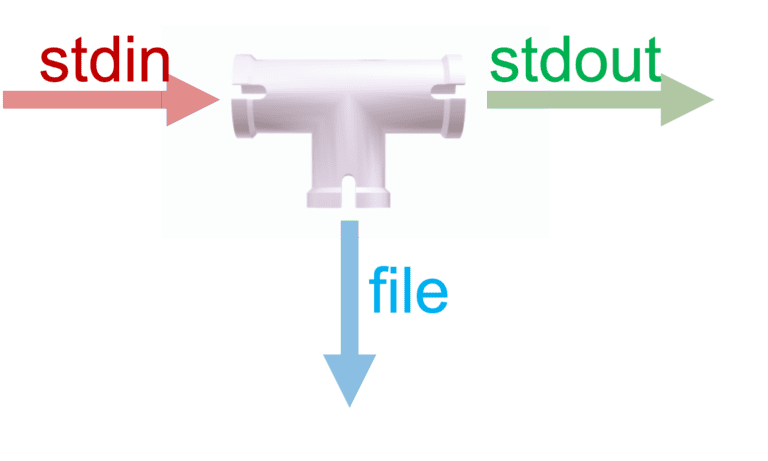

This is where the tee command comes into the picture! The “tee” command is named after the T-shaped pipe fitting in plumbing, which splits water in two directions.

What Does tee Do?

Takes input from a pipe (stdin = standard input)

Makes a copy of that input and saves it to a file (like taking a snapshot of input)

ALSO sends that same input forward through a pipe

It's like a special pipe fitting that both fills your bucket AND lets water continue flowing downstream.

Basic Syntax of tee

tee [options] [filename]

Common options:

-a: Append to the file instead of overwriting it
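As a small sketch of the difference -a makes, the lines below run tee twice without it and then once with it. The file name notes.txt and the sample text are made up. Each tee also prints its input to the screen, in addition to what cat shows.

```shell
# Without -a, each run replaces the file's contents
echo "first run" | tee notes.txt
echo "second run" | tee notes.txt
cat notes.txt
# cat prints: second run

# With -a, the new line is added after the existing one
echo "third run" | tee -a notes.txt
cat notes.txt
# cat prints two lines: second run, then third run
```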

Let's now solve our original problem with the tee command:

date | tee fulldate.txt | cut --delimiter=" " --fields=1

The date command produces output: Mon Apr 8 15:30:45 EDT 2025

The tee command:

Saves a copy of this output to fulldate.txt

ALSO passes the same output to the pipe

The cut command receives the date output and extracts just the day: Mon

On your screen, you'll see:

Mon

And if you check the file, you'll see:

cat fulldate.txt

Mon Apr 8 15:30:45 EDT 2025

Now that you understand how the tee command works, let's put your knowledge to the test with a mini challenge!

Starting with this command:

date | tee fulldate.txt | cut --delimiter=" " --fields=1

Your task is to modify it so that:

The full date is still saved to fulldate.txt (using tee)

The day of the week (Mon, Tue, etc.) is saved to a new file called today.txt (instead of being displayed on screen)

Think about how you can combine what you've learned about pipes, the tee command, and redirection to accomplish this.

Give it a try before looking at the solution below!

.

.

Solution:

Here's how to solve the challenge:

date | tee fulldate.txt | cut --delimiter=" " --fields=1 > today.txt

Final reminder: When working with pipes and redirection, always remember that redirection takes priority over pipes. This is a critical concept to understand.
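To check the solution with predictable results, one option is to swap date for a fixed echo line (an assumption made only so the output is the same every time):

```shell
# tee saves the full line; cut's output goes to today.txt instead of the screen
echo "Mon Apr 8 15:30:45 EDT 2025" | tee fulldate.txt | cut --delimiter=" " --fields=1 > today.txt

cat fulldate.txt
# prints: Mon Apr 8 15:30:45 EDT 2025

cat today.txt
# prints: Mon
```

The redirection sits on cut, the last command in the chain, so it doesn't take anything away from an earlier pipe.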

Commands That Don't Accept Standard Input: Introducing “xargs”

After learning about pipes and redirections, you might think that all Linux commands can work with standard input. But there's a surprise waiting for you!

Not all commands accept standard input! Some commands only accept command line arguments, and will completely ignore anything you try to pipe into them.

Let's see this problem in action:

# Try piping date output to echo

date | echo

When you run this, you'll see:

Wait, what happened? You just see a blank line! The date command ran, but echo didn't show its output. Why?

The Problem: Commands That Only Accept Arguments

The echo command is designed to only work with command line arguments (the text you type after the command). It completely ignores standard input from pipes!

This is like trying to pour water into a cup that has no opening at the top - the water just spills everywhere instead of going in.

Other commands that behave this way include:

rm (for removing files)

mkdir (for creating directories)

cp (for copying files)

And more!

The Solution: xargs

This is exactly where the xargs command comes to the rescue! Think of xargs as a translator that converts piped data into command line arguments.

date | xargs echo

Now when you run this, you'll see:

Mon Apr 8 15:30:45 EDT 2025

Success! The xargs command took the output from date and turned it into command line arguments for echo, and echo printed them to your terminal!

What Actually Happened?

Let's break down what's happening:

Without xargs:

date | echo

date produces: Mon Apr 8 15:30:45 EDT 2025

echo ignores this input and just prints a blank line

With xargs:

date | xargs echo

date produces: Mon Apr 8 15:30:45 EDT 2025

xargs takes this output and converts it to arguments

It's essentially running: echo Mon Apr 8 15:30:45 EDT 2025

echo prints: Mon Apr 8 15:30:45 EDT 2025

It's like xargs is typing the piped input as arguments for you!
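The command you hand to xargs can keep arguments of its own; xargs adds the piped words after them. Here is a short sketch using a fixed echo line in place of date, plus two made-up directory names, demo_reports and demo_backups:

```shell
# xargs appends the piped words after the argument echo already has
echo "Mon Apr 8 15:30:45 EDT 2025" | xargs echo "Today is:"
# prints: Today is: Mon Apr 8 15:30:45 EDT 2025

# mkdir ignores standard input, so xargs passes the names as arguments
# (-p tells mkdir not to complain if a directory already exists)
echo "demo_reports demo_backups" | xargs mkdir -p

# Confirm that both directories now exist
ls -d demo_reports demo_backups
```

The second pipeline ends up running mkdir -p demo_reports demo_backups, just as if you had typed the names yourself.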

Summary

Now you have a complete set of tools for connecting Linux commands:

Piping connects STDOUT (Standard Output) of one command to the STDIN (Standard Input) of another command

Pipes (|) work with commands that accept standard input

Redirecting STDOUT to a file breaks the pipeline, because nothing is left for the next command

To save a “snapshot” of the data without breaking the pipeline, use the tee command

If a command doesn't accept STDIN but you want to pipe to it, use xargs

Commands you use with xargs can still have their own arguments

Final Thoughts

You've just learned how to connect multiple commands with pipes, and you might be thinking, "This is neat, but why should I care about getting just the day number from a date?"

Right now, these examples might seem simple - even a bit trivial. Getting the day of the week or the day number from a date isn't going to change your life.

But here's the secret: What you're learning now is the foundation for incredibly powerful workflows later on.

Here are some powerful examples from various fields showing how pipes save time and solve complex problems:

Data Analysis and Reporting

# Extract all email addresses from a large text file and count unique domains

grep -E -o "\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,6}\b" contacts.txt | cut -d@ -f2 | sort | uniq -c | sort -nr

This one-liner finds email patterns, extracts just the domain portions, sorts them, counts how many times each domain occurs, and sorts by frequency - all without intermediate files.
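To try the counting part of this pipeline on something small, here is a simplified sketch. It assumes a made-up file, sample_contacts.txt, with exactly one address per line, so the grep step can be skipped. The addresses are invented, and domain_counts.txt is a made-up name for the result file.

```shell
# Hypothetical contact list: one address per line
echo "ana@example.com" > sample_contacts.txt
echo "bob@example.org" >> sample_contacts.txt
echo "cy@example.com" >> sample_contacts.txt
echo "dee@test.net" >> sample_contacts.txt
echo "eli@example.com" >> sample_contacts.txt
echo "fay@example.org" >> sample_contacts.txt

# Keep the part after @, group identical domains, count them, biggest first
cut -d@ -f2 sample_contacts.txt | sort | uniq -c | sort -nr > domain_counts.txt

# Expected order: example.com (3), example.org (2), test.net (1)
cat domain_counts.txt
```

The first sort matters because uniq only merges identical lines that sit next to each other. The exact spacing in front of each count can vary between systems.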

Document Processing

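# Extract text from all PDFs in a directory and search it for a topic

find . -name "*.pdf" -print0 | xargs -0 -I{} pdftotext {} - | grep -i "important topic" | tee search_results.txt | wc -l

This processes potentially hundreds of PDF files, extracts their text, searches for specific terms, saves the matching lines, and counts how many were found. pdftotext handles one PDF per run, so -I{} makes xargs start it once per file, and the trailing - tells pdftotext to print the text instead of writing a .txt file.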

# Generate a table of contents from markdown headings

grep "^## " document.md | sed 's/^## //' | nl -w2 -s". " | tee toc.txt

This extracts the second-level headings from a document, numbers them, and creates a clean table of contents.

So while your current examples may seem simple, you're building foundational skills that will pay off later. The humble pipe (|) is one of the most powerful features in Linux, and learning to use it effectively is what separates casual users from power users.

As you learn more commands and practice connecting them together, you'll soon be creating pipelines that can accomplish in seconds what might take hours to do manually.